❯ brew install rancher

Running `brew update --preinstall`...

==> Auto-updated Homebrew!

Updated 3 taps (fluxcd/tap, homebrew/core and homebrew/cask).

==> New Formulae

bartib ddh sqls

==> Updated Formulae

Updated 281 formulae.

==> New Casks

cnkiexpress fmail2 vertcoin-core windterm

==> Updated Casks

Updated 120 casks.

==> Deleted Casks

biopassfido bootchamp firestormos flip4mac flvcd-bigrats lego-digital-designer multiscan-3b mxsrvs piskel

==> Downloading https://github.com/rancher-sandbox/rancher-desktop/releases/download/v1.2.1/Rancher.Desktop-1.2.1.aarch64.dmg

==> Downloading from https://objects.githubusercontent.com/github-production-release-asset-2e65be/306701996/e808f916-ea8d-4477-8778-0c06b2c686b9?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIAIWNJYAX4C

######################################################################## 100.0%

==> Installing Cask rancher

==> Moving App 'Rancher Desktop.app' to '/Applications/Rancher Desktop.app'

🍺 rancher was successfully installed!

~ via 🐍 v3.9.2 on ☁️ (us-west-2) took 41s

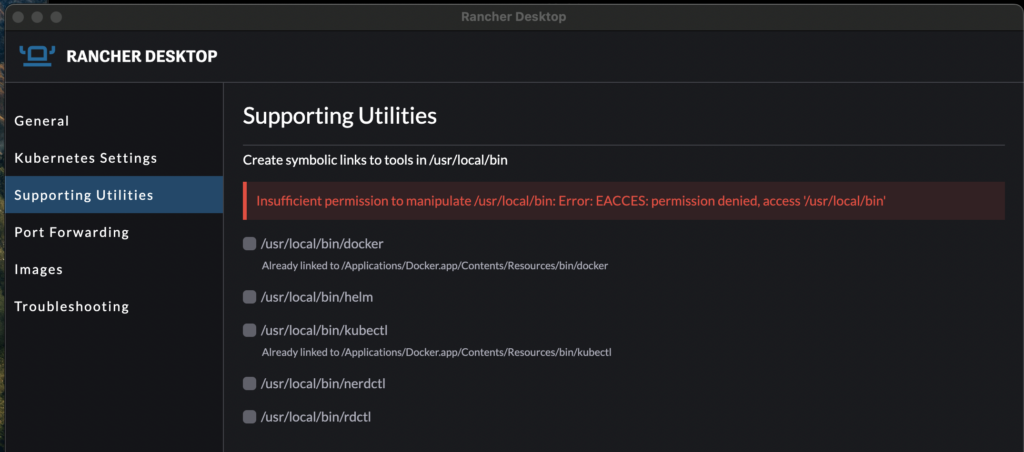

Rancher Desktop appears to want write permission to /usr/local/bin. I still need to fix this, but I am capturing the error here first.
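Before changing ownership or permissions, it can help to confirm whether the current user can actually write to that directory. The following is a minimal sketch; `can_write` is a hypothetical helper written for this note and is not part of Rancher Desktop or Homebrew.

```python
import os
from pathlib import Path


def can_write(directory: str) -> bool:
    """Return True if the current user may create files in `directory`."""
    path = Path(directory)
    # Creating an entry in a directory needs both write and search (execute) permission.
    return path.is_dir() and os.access(path, os.W_OK | os.X_OK)


if __name__ == "__main__":
    target = "/usr/local/bin"  # the directory named in the error above
    print(f"{target} writable: {can_write(target)}")
```

If this reports that the directory is not writable, that would be consistent with the permission prompt, though it does not by itself show what Rancher Desktop intends to write there.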

background.log

2022-04-27T00:07:12.747Z: Trying to determine version, can't get output from /Applications/Rancher Desktop.app/Contents/Resources/resources/darwin/bin/kubectl version Error: /Applications/Rancher Desktop.app/Contents/Resources/resources/darwin/bin/kubectl exited with code 1

at ChildProcess.<anonymous> (/Applications/Rancher Desktop.app/Contents/Resources/app.asar/dist/app/background.js:1:8836)

at ChildProcess.emit (node:events:394:28)

at Process.ChildProcess._handle.onexit (node:internal/child_process:290:12) {

stdout: 'Client Version: version.Info{Major:"1", Minor:"23", GitVersion:"v1.23.6", GitCommit:"ad3338546da947756e8a88aa6822e9c11e7eac22", GitTreeState:"clean", BuildDate:"2022-04-14T08:49:13Z", GoVersion:"go1.17.9", Compiler:"gc", Platform:"darwin/amd64"}\n'

}

2022-04-27T00:07:12.754Z: Can't get version for kubectl in /Applications/Rancher Desktop.app/Contents/Resources/resources/darwin/bin/kubectl
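Note that kubectl did print a client version before exiting with code 1, so the version is still recoverable from the captured stdout. The sketch below pulls `GitVersion` out of that text. The helper name `client_git_version` is made up for illustration, the sample string is abbreviated from the log entry above, and this is not how Rancher Desktop itself parses the output.

```python
import re
from typing import Optional


def client_git_version(stdout: str) -> Optional[str]:
    """Extract the GitVersion field from `kubectl version` client output."""
    match = re.search(r'GitVersion:"([^"]+)"', stdout)
    return match.group(1) if match else None


if __name__ == "__main__":
    # Abbreviated from the stdout captured in background.log.
    sample = 'Client Version: version.Info{Major:"1", Minor:"23", GitVersion:"v1.23.6", Platform:"darwin/amd64"}\n'
    print(client_git_version(sample))  # prints v1.23.6
```

One possible reason for the non-zero exit is that the server half of `kubectl version` could not reach a cluster yet; that is a guess, not something the log confirms. Running `kubectl version --client` limits the command to the client version.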

k3s.log
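This log mixes two line formats: k3s's own `time=... level=... msg=...` entries and Kubernetes klog entries that start with a severity letter (`I`, `W`, `E`, `F`) followed by the date. When scanning a long excerpt like this one, a small filter makes the warnings and errors easier to find. This is only a sketch; the function names are hypothetical and the patterns are inferred from the lines shown here, not from any official format description.

```python
import re
from typing import Iterable, List, Optional

# k3s entries carry an explicit level field, e.g. level=error
LEVEL_FIELD = re.compile(r"\blevel=(\w+)")
# klog entries start with a severity letter and MMDD, e.g. E0427 00:06:39.048746
KLOG_PREFIX = re.compile(r"^([IWEF])\d{4} \d{2}:\d{2}:\d{2}\.\d+")
KLOG_LEVELS = {"I": "info", "W": "warning", "E": "error", "F": "fatal"}


def severity(line: str) -> Optional[str]:
    """Return the severity of a log line, or None if it matches neither format."""
    klog = KLOG_PREFIX.match(line)
    if klog:
        return KLOG_LEVELS[klog.group(1)]
    field = LEVEL_FIELD.search(line)
    return field.group(1) if field else None


def problems(lines: Iterable[str]) -> List[str]:
    """Keep only lines whose severity is warning or worse."""
    return [ln for ln in lines if severity(ln) in {"warning", "error", "fatal"}]


if __name__ == "__main__":
    with open("k3s.log", encoding="utf-8") as fh:  # path is an assumption
        for line in problems(fh):
            print(line.rstrip())
```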

time="2022-04-27T00:06:36Z" level=info msg="Acquiring lock file /var/lib/rancher/k3s/data/.lock"

time="2022-04-27T00:06:36Z" level=info msg="Preparing data dir /var/lib/rancher/k3s/data/cefe7380a851b348db6a6b899546b24f9e19b38f3b34eca24bdf84853943b0bb"

time="2022-04-27T00:06:37Z" level=info msg="Found ip 192.168.50.195 from iface rd0"

time="2022-04-27T00:06:37Z" level=info msg="Starting k3s v1.22.7+k3s1 (8432d7f2)"

time="2022-04-27T00:06:37Z" level=info msg="Configuring sqlite3 database connection pooling: maxIdleConns=2, maxOpenConns=0, connMaxLifetime=0s"

time="2022-04-27T00:06:37Z" level=info msg="Configuring database table schema and indexes, this may take a moment..."

time="2022-04-27T00:06:37Z" level=info msg="Database tables and indexes are up to date"

time="2022-04-27T00:06:37Z" level=info msg="Kine available at unix://kine.sock"

time=”2022-04-27T00:06:37Z” level=info msg=”generated self-signed CA certificate CN=k3s-client-ca@1651017997: notBefore=2022-04-27 00:06:37.651277305 +0000 UTC notAfter=2032-04-24 00:06:37.651277305 +0000 UTC”

time=”2022-04-27T00:06:37Z” level=info msg=”certificate CN=system:admin,O=system:masters signed by CN=k3s-client-ca@1651017997: notBefore=2022-04-27 00:06:37 +0000 UTC notAfter=2023-04-27 00:06:37 +0000 UTC”

time=”2022-04-27T00:06:37Z” level=info msg=”certificate CN=system:kube-controller-manager signed by CN=k3s-client-ca@1651017997: notBefore=2022-04-27 00:06:37 +0000 UTC notAfter=2023-04-27 00:06:37 +0000 UTC”

time=”2022-04-27T00:06:37Z” level=info msg=”certificate CN=system:kube-scheduler signed by CN=k3s-client-ca@1651017997: notBefore=2022-04-27 00:06:37 +0000 UTC notAfter=2023-04-27 00:06:37 +0000 UTC”

time=”2022-04-27T00:06:37Z” level=info msg=”certificate CN=kube-apiserver signed by CN=k3s-client-ca@1651017997: notBefore=2022-04-27 00:06:37 +0000 UTC notAfter=2023-04-27 00:06:37 +0000 UTC”

time=”2022-04-27T00:06:37Z” level=info msg=”certificate CN=system:kube-proxy signed by CN=k3s-client-ca@1651017997: notBefore=2022-04-27 00:06:37 +0000 UTC notAfter=2023-04-27 00:06:37 +0000 UTC”

time=”2022-04-27T00:06:37Z” level=info msg=”certificate CN=system:k3s-controller signed by CN=k3s-client-ca@1651017997: notBefore=2022-04-27 00:06:37 +0000 UTC notAfter=2023-04-27 00:06:37 +0000 UTC”

time=”2022-04-27T00:06:37Z” level=info msg=”certificate CN=k3s-cloud-controller-manager signed by CN=k3s-client-ca@1651017997: notBefore=2022-04-27 00:06:37 +0000 UTC notAfter=2023-04-27 00:06:37 +0000 UTC”

time=”2022-04-27T00:06:37Z” level=info msg=”generated self-signed CA certificate CN=k3s-server-ca@1651017997: notBefore=2022-04-27 00:06:37.653436472 +0000 UTC notAfter=2032-04-24 00:06:37.653436472 +0000 UTC”

time=”2022-04-27T00:06:37Z” level=info msg=”certificate CN=kube-apiserver signed by CN=k3s-server-ca@1651017997: notBefore=2022-04-27 00:06:37 +0000 UTC notAfter=2023-04-27 00:06:37 +0000 UTC”

time=”2022-04-27T00:06:37Z” level=info msg=”generated self-signed CA certificate CN=k3s-request-header-ca@1651017997: notBefore=2022-04-27 00:06:37.653946222 +0000 UTC notAfter=2032-04-24 00:06:37.653946222 +0000 UTC”

time=”2022-04-27T00:06:37Z” level=info msg=”certificate CN=system:auth-proxy signed by CN=k3s-request-header-ca@1651017997: notBefore=2022-04-27 00:06:37 +0000 UTC notAfter=2023-04-27 00:06:37 +0000 UTC”

time=”2022-04-27T00:06:37Z” level=info msg=”generated self-signed CA certificate CN=etcd-server-ca@1651017997: notBefore=2022-04-27 00:06:37.654396555 +0000 UTC notAfter=2032-04-24 00:06:37.654396555 +0000 UTC”

time=”2022-04-27T00:06:37Z” level=info msg=”certificate CN=etcd-server signed by CN=etcd-server-ca@1651017997: notBefore=2022-04-27 00:06:37 +0000 UTC notAfter=2023-04-27 00:06:37 +0000 UTC”

time=”2022-04-27T00:06:37Z” level=info msg=”certificate CN=etcd-client signed by CN=etcd-server-ca@1651017997: notBefore=2022-04-27 00:06:37 +0000 UTC notAfter=2023-04-27 00:06:37 +0000 UTC”

time=”2022-04-27T00:06:37Z” level=info msg=”generated self-signed CA certificate CN=etcd-peer-ca@1651017997: notBefore=2022-04-27 00:06:37.655053472 +0000 UTC notAfter=2032-04-24 00:06:37.655053472 +0000 UTC”

time=”2022-04-27T00:06:37Z” level=info msg=”certificate CN=etcd-peer signed by CN=etcd-peer-ca@1651017997: notBefore=2022-04-27 00:06:37 +0000 UTC notAfter=2023-04-27 00:06:37 +0000 UTC”

time=”2022-04-27T00:06:37Z” level=info msg=”certificate CN=k3s,O=k3s signed by CN=k3s-server-ca@1651017997: notBefore=2022-04-27 00:06:37 +0000 UTC notAfter=2023-04-27 00:06:37 +0000 UTC”

time=”2022-04-27T00:06:37Z” level=info msg=”Active TLS secret (ver=) (count 9): map[listener.cattle.io/cn-10.43.0.1:10.43.0.1 listener.cattle.io/cn-127.0.0.1:127.0.0.1 listener.cattle.io/cn-192.168.50.195:192.168.50.195 listener.cattle.io/cn-kubernetes:kubernetes listener.cattle.io/cn-kubernetes.default:kubernetes.default listener.cattle.io/cn-kubernetes.default.svc:kubernetes.default.svc listener.cattle.io/cn-kubernetes.default.svc.cluster.local:kubernetes.default.svc.cluster.local listener.cattle.io/cn-lima-rancher-desktop:lima-rancher-desktop listener.cattle.io/cn-localhost:localhost listener.cattle.io/fingerprint:SHA1=30973A01DF766F8C3E62C41DA4AAB3D1CED3F3F1]”

time="2022-04-27T00:06:37Z" level=info msg="Running kube-apiserver --advertise-address=192.168.50.195 --advertise-port=6443 --allow-privileged=true --anonymous-auth=false --api-audiences=https://kubernetes.default.svc.cluster.local,k3s --authorization-mode=Node,RBAC --bind-address=127.0.0.1 --cert-dir=/var/lib/rancher/k3s/server/tls/temporary-certs --client-ca-file=/var/lib/rancher/k3s/server/tls/client-ca.crt --enable-admission-plugins=NodeRestriction --etcd-servers=unix://kine.sock --feature-gates=JobTrackingWithFinalizers=true --insecure-port=0 --kubelet-certificate-authority=/var/lib/rancher/k3s/server/tls/server-ca.crt --kubelet-client-certificate=/var/lib/rancher/k3s/server/tls/client-kube-apiserver.crt --kubelet-client-key=/var/lib/rancher/k3s/server/tls/client-kube-apiserver.key --profiling=false --proxy-client-cert-file=/var/lib/rancher/k3s/server/tls/client-auth-proxy.crt --proxy-client-key-file=/var/lib/rancher/k3s/server/tls/client-auth-proxy.key --requestheader-allowed-names=system:auth-proxy --requestheader-client-ca-file=/var/lib/rancher/k3s/server/tls/request-header-ca.crt --requestheader-extra-headers-prefix=X-Remote-Extra- --requestheader-group-headers=X-Remote-Group --requestheader-username-headers=X-Remote-User --secure-port=6444 --service-account-issuer=https://kubernetes.default.svc.cluster.local --service-account-key-file=/var/lib/rancher/k3s/server/tls/service.key --service-account-signing-key-file=/var/lib/rancher/k3s/server/tls/service.key --service-cluster-ip-range=10.43.0.0/16 --service-node-port-range=30000-32767 --storage-backend=etcd3 --tls-cert-file=/var/lib/rancher/k3s/server/tls/serving-kube-apiserver.crt --tls-private-key-file=/var/lib/rancher/k3s/server/tls/serving-kube-apiserver.key"

Flag --insecure-port has been deprecated, This flag has no effect now and will be removed in v1.24.

I0427 00:06:37.731515 3410 server.go:581] external host was not specified, using 192.168.50.195

I0427 00:06:37.731638 3410 server.go:175] Version: v1.22.7+k3s1

time="2022-04-27T00:06:37Z" level=info msg="Running kube-scheduler --authentication-kubeconfig=/var/lib/rancher/k3s/server/cred/scheduler.kubeconfig --authorization-kubeconfig=/var/lib/rancher/k3s/server/cred/scheduler.kubeconfig --bind-address=127.0.0.1 --kubeconfig=/var/lib/rancher/k3s/server/cred/scheduler.kubeconfig --leader-elect=false --profiling=false --secure-port=10259"

time="2022-04-27T00:06:37Z" level=info msg="Running kube-controller-manager --allocate-node-cidrs=true --authentication-kubeconfig=/var/lib/rancher/k3s/server/cred/controller.kubeconfig --authorization-kubeconfig=/var/lib/rancher/k3s/server/cred/controller.kubeconfig --bind-address=127.0.0.1 --cluster-cidr=10.42.0.0/16 --cluster-signing-kube-apiserver-client-cert-file=/var/lib/rancher/k3s/server/tls/client-ca.crt --cluster-signing-kube-apiserver-client-key-file=/var/lib/rancher/k3s/server/tls/client-ca.key --cluster-signing-kubelet-client-cert-file=/var/lib/rancher/k3s/server/tls/client-ca.crt --cluster-signing-kubelet-client-key-file=/var/lib/rancher/k3s/server/tls/client-ca.key --cluster-signing-kubelet-serving-cert-file=/var/lib/rancher/k3s/server/tls/server-ca.crt --cluster-signing-kubelet-serving-key-file=/var/lib/rancher/k3s/server/tls/server-ca.key --cluster-signing-legacy-unknown-cert-file=/var/lib/rancher/k3s/server/tls/client-ca.crt --cluster-signing-legacy-unknown-key-file=/var/lib/rancher/k3s/server/tls/client-ca.key --configure-cloud-routes=false --controllers=*,-service,-route,-cloud-node-lifecycle --feature-gates=JobTrackingWithFinalizers=true --kubeconfig=/var/lib/rancher/k3s/server/cred/controller.kubeconfig --leader-elect=false --profiling=false --root-ca-file=/var/lib/rancher/k3s/server/tls/server-ca.crt --secure-port=10257 --service-account-private-key-file=/var/lib/rancher/k3s/server/tls/service.key --use-service-account-credentials=true"

time="2022-04-27T00:06:37Z" level=info msg="Running cloud-controller-manager --allocate-node-cidrs=true --authentication-kubeconfig=/var/lib/rancher/k3s/server/cred/cloud-controller.kubeconfig --authorization-kubeconfig=/var/lib/rancher/k3s/server/cred/cloud-controller.kubeconfig --bind-address=127.0.0.1 --cloud-provider=k3s --cluster-cidr=10.42.0.0/16 --configure-cloud-routes=false --kubeconfig=/var/lib/rancher/k3s/server/cred/cloud-controller.kubeconfig --leader-elect=false --node-status-update-frequency=1m0s --port=0 --profiling=false"

time=”2022-04-27T00:06:37Z” level=info msg=”Node token is available at /var/lib/rancher/k3s/server/token”

time=”2022-04-27T00:06:37Z” level=info msg=”To join node to cluster: k3s agent -s https://192.168.5.15:6443 -t ${NODE_TOKEN}”

time=”2022-04-27T00:06:37Z” level=info msg=”Wrote kubeconfig /etc/rancher/k3s/k3s.yaml”

time=”2022-04-27T00:06:37Z” level=info msg=”Run: k3s kubectl”

time=”2022-04-27T00:06:37Z” level=info msg=”Waiting for API server to become available”

time=”2022-04-27T00:06:37Z” level=info msg=”certificate CN=lima-rancher-desktop signed by CN=k3s-server-ca@1651017997: notBefore=2022-04-27 00:06:37 +0000 UTC notAfter=2023-04-27 00:06:37 +0000 UTC”

time=”2022-04-27T00:06:37Z” level=info msg=”certificate CN=system:node:lima-rancher-desktop,O=system:nodes signed by CN=k3s-client-ca@1651017997: notBefore=2022-04-27 00:06:37 +0000 UTC notAfter=2023-04-27 00:06:37 +0000 UTC”

time=”2022-04-27T00:06:37Z” level=info msg=”Module overlay was already loaded”

time=”2022-04-27T00:06:37Z” level=info msg=”Module nf_conntrack was already loaded”

time=”2022-04-27T00:06:37Z” level=info msg=”Module iptable_nat was already loaded”

time="2022-04-27T00:06:37Z" level=info msg="Set sysctl 'net/netfilter/nf_conntrack_tcp_timeout_established' to 86400"

time="2022-04-27T00:06:37Z" level=info msg="Set sysctl 'net/netfilter/nf_conntrack_tcp_timeout_close_wait' to 3600"

time="2022-04-27T00:06:37Z" level=info msg="Set sysctl 'net/ipv4/conf/all/forwarding' to 1"

time="2022-04-27T00:06:37Z" level=info msg="Set sysctl 'net/netfilter/nf_conntrack_max' to 131072"

time="2022-04-27T00:06:37Z" level=info msg="Connecting to proxy" url="wss://127.0.0.1:6443/v1-k3s/connect"

time="2022-04-27T00:06:37Z" level=error msg="Failed to connect to proxy" error="websocket: bad handshake"

time="2022-04-27T00:06:37Z" level=error msg="Remotedialer proxy error" error="websocket: bad handshake"

I0427 00:06:38.017323 3410 shared_informer.go:240] Waiting for caches to sync for node_authorizer

I0427 00:06:38.017883 3410 plugins.go:158] Loaded 12 mutating admission controller(s) successfully in the following order: NamespaceLifecycle,LimitRanger,ServiceAccount,NodeRestriction,TaintNodesByCondition,Priority,DefaultTolerationSeconds,DefaultStorageClass,StorageObjectInUseProtection,RuntimeClass,DefaultIngressClass,MutatingAdmissionWebhook.

I0427 00:06:38.017892 3410 plugins.go:161] Loaded 11 validating admission controller(s) successfully in the following order: LimitRanger,ServiceAccount,PodSecurity,Priority,PersistentVolumeClaimResize,RuntimeClass,CertificateApproval,CertificateSigning,CertificateSubjectRestriction,ValidatingAdmissionWebhook,ResourceQuota.

I0427 00:06:38.018464 3410 plugins.go:158] Loaded 12 mutating admission controller(s) successfully in the following order: NamespaceLifecycle,LimitRanger,ServiceAccount,NodeRestriction,TaintNodesByCondition,Priority,DefaultTolerationSeconds,DefaultStorageClass,StorageObjectInUseProtection,RuntimeClass,DefaultIngressClass,MutatingAdmissionWebhook.

I0427 00:06:38.018471 3410 plugins.go:161] Loaded 11 validating admission controller(s) successfully in the following order: LimitRanger,ServiceAccount,PodSecurity,Priority,PersistentVolumeClaimResize,RuntimeClass,CertificateApproval,CertificateSigning,CertificateSubjectRestriction,ValidatingAdmissionWebhook,ResourceQuota.

W0427 00:06:38.026428 3410 genericapiserver.go:455] Skipping API apiextensions.k8s.io/v1beta1 because it has no resources.

I0427 00:06:38.026967 3410 instance.go:278] Using reconciler: lease

I0427 00:06:38.051563 3410 rest.go:130] the default service ipfamily for this cluster is: IPv4

W0427 00:06:38.254657 3410 genericapiserver.go:455] Skipping API authentication.k8s.io/v1beta1 because it has no resources.

W0427 00:06:38.256884 3410 genericapiserver.go:455] Skipping API authorization.k8s.io/v1beta1 because it has no resources.

W0427 00:06:38.266456 3410 genericapiserver.go:455] Skipping API certificates.k8s.io/v1beta1 because it has no resources.

W0427 00:06:38.267652 3410 genericapiserver.go:455] Skipping API coordination.k8s.io/v1beta1 because it has no resources.

W0427 00:06:38.271815 3410 genericapiserver.go:455] Skipping API networking.k8s.io/v1beta1 because it has no resources.

W0427 00:06:38.275295 3410 genericapiserver.go:455] Skipping API node.k8s.io/v1alpha1 because it has no resources.

W0427 00:06:38.284292 3410 genericapiserver.go:455] Skipping API rbac.authorization.k8s.io/v1beta1 because it has no resources.

W0427 00:06:38.284306 3410 genericapiserver.go:455] Skipping API rbac.authorization.k8s.io/v1alpha1 because it has no resources.

W0427 00:06:38.285399 3410 genericapiserver.go:455] Skipping API scheduling.k8s.io/v1beta1 because it has no resources.

W0427 00:06:38.285403 3410 genericapiserver.go:455] Skipping API scheduling.k8s.io/v1alpha1 because it has no resources.

W0427 00:06:38.288626 3410 genericapiserver.go:455] Skipping API storage.k8s.io/v1alpha1 because it has no resources.

W0427 00:06:38.290165 3410 genericapiserver.go:455] Skipping API flowcontrol.apiserver.k8s.io/v1alpha1 because it has no resources.

W0427 00:06:38.295742 3410 genericapiserver.go:455] Skipping API apps/v1beta2 because it has no resources.

W0427 00:06:38.295756 3410 genericapiserver.go:455] Skipping API apps/v1beta1 because it has no resources.

W0427 00:06:38.300609 3410 genericapiserver.go:455] Skipping API admissionregistration.k8s.io/v1beta1 because it has no resources.

I0427 00:06:38.320071 3410 plugins.go:158] Loaded 12 mutating admission controller(s) successfully in the following order: NamespaceLifecycle,LimitRanger,ServiceAccount,NodeRestriction,TaintNodesByCondition,Priority,DefaultTolerationSeconds,DefaultStorageClass,StorageObjectInUseProtection,RuntimeClass,DefaultIngressClass,MutatingAdmissionWebhook.

I0427 00:06:38.320086 3410 plugins.go:161] Loaded 11 validating admission controller(s) successfully in the following order: LimitRanger,ServiceAccount,PodSecurity,Priority,PersistentVolumeClaimResize,RuntimeClass,CertificateApproval,CertificateSigning,CertificateSubjectRestriction,ValidatingAdmissionWebhook,ResourceQuota.

W0427 00:06:38.323524 3410 genericapiserver.go:455] Skipping API apiregistration.k8s.io/v1beta1 because it has no resources.

I0427 00:06:39.007258 3410 dynamic_cafile_content.go:155] “Starting controller” name=”request-header::/var/lib/rancher/k3s/server/tls/request-header-ca.crt”

I0427 00:06:39.007336 3410 dynamic_cafile_content.go:155] “Starting controller” name=”client-ca-bundle::/var/lib/rancher/k3s/server/tls/client-ca.crt”

I0427 00:06:39.007419 3410 secure_serving.go:266] Serving securely on 127.0.0.1:6444

I0427 00:06:39.007444 3410 dynamic_serving_content.go:129] “Starting controller” name=”serving-cert::/var/lib/rancher/k3s/server/tls/serving-kube-apiserver.crt::/var/lib/rancher/k3s/server/tls/serving-kube-apiserver.key”

I0427 00:06:39.007481 3410 tlsconfig.go:240] “Starting DynamicServingCertificateController”

I0427 00:06:39.007893 3410 customresource_discovery_controller.go:209] Starting DiscoveryController

I0427 00:06:39.007911 3410 dynamic_serving_content.go:129] “Starting controller” name=”aggregator-proxy-cert::/var/lib/rancher/k3s/server/tls/client-auth-proxy.crt::/var/lib/rancher/k3s/server/tls/client-auth-proxy.key”

I0427 00:06:39.007917 3410 apf_controller.go:312] Starting API Priority and Fairness config controller

I0427 00:06:39.007926 3410 apiservice_controller.go:97] Starting APIServiceRegistrationController

I0427 00:06:39.007930 3410 cache.go:32] Waiting for caches to sync for APIServiceRegistrationController controller

I0427 00:06:39.007940 3410 autoregister_controller.go:141] Starting autoregister controller

I0427 00:06:39.007942 3410 cache.go:32] Waiting for caches to sync for autoregister controller

I0427 00:06:39.008287 3410 controller.go:85] Starting OpenAPI controller

I0427 00:06:39.008296 3410 naming_controller.go:291] Starting NamingConditionController

I0427 00:06:39.008302 3410 establishing_controller.go:76] Starting EstablishingController

I0427 00:06:39.008307 3410 nonstructuralschema_controller.go:192] Starting NonStructuralSchemaConditionController

I0427 00:06:39.008311 3410 apiapproval_controller.go:186] Starting KubernetesAPIApprovalPolicyConformantConditionController

I0427 00:06:39.008318 3410 crd_finalizer.go:266] Starting CRDFinalizer

I0427 00:06:39.008410 3410 crdregistration_controller.go:111] Starting crd-autoregister controller

I0427 00:06:39.008414 3410 shared_informer.go:240] Waiting for caches to sync for crd-autoregister

I0427 00:06:39.008741 3410 cluster_authentication_trust_controller.go:440] Starting cluster_authentication_trust_controller controller

I0427 00:06:39.008743 3410 shared_informer.go:240] Waiting for caches to sync for cluster_authentication_trust_controller

I0427 00:06:39.008942 3410 available_controller.go:491] Starting AvailableConditionController

I0427 00:06:39.008946 3410 cache.go:32] Waiting for caches to sync for AvailableConditionController controller

I0427 00:06:39.008952 3410 controller.go:83] Starting OpenAPI AggregationController

I0427 00:06:39.010431 3410 dynamic_cafile_content.go:155] “Starting controller” name=”client-ca-bundle::/var/lib/rancher/k3s/server/tls/client-ca.crt”

I0427 00:06:39.010446 3410 dynamic_cafile_content.go:155] “Starting controller” name=”request-header::/var/lib/rancher/k3s/server/tls/request-header-ca.crt”

I0427 00:06:39.043331 3410 controller.go:611] quota admission added evaluator for: namespaces

E0427 00:06:39.048746 3410 controller.go:151] Unable to perform initial Kubernetes service initialization: Service "kubernetes" is invalid: spec.clusterIPs: Invalid value: []string{"10.43.0.1"}: failed to allocate IP 10.43.0.1: cannot allocate resources of type serviceipallocations at this time

E0427 00:06:39.048983 3410 controller.go:156] Unable to remove old endpoints from kubernetes service: StorageError: key not found, Code: 1, Key: /registry/masterleases/192.168.50.195, ResourceVersion: 0, AdditionalErrorMsg:

I0427 00:06:39.108524 3410 shared_informer.go:247] Caches are synced for crd-autoregister

I0427 00:06:39.108559 3410 apf_controller.go:317] Running API Priority and Fairness config worker

I0427 00:06:39.108565 3410 cache.go:39] Caches are synced for autoregister controller

I0427 00:06:39.108751 3410 shared_informer.go:247] Caches are synced for cluster_authentication_trust_controller

I0427 00:06:39.108560 3410 cache.go:39] Caches are synced for APIServiceRegistrationController controller

I0427 00:06:39.109061 3410 cache.go:39] Caches are synced for AvailableConditionController controller

I0427 00:06:39.117424 3410 shared_informer.go:247] Caches are synced for node_authorizer

I0427 00:06:40.007666 3410 controller.go:132] OpenAPI AggregationController: action for item : Nothing (removed from the queue).

I0427 00:06:40.007705 3410 controller.go:132] OpenAPI AggregationController: action for item k8s_internal_local_delegation_chain_0000000000: Nothing (removed from the queue).

I0427 00:06:40.014735 3410 storage_scheduling.go:132] created PriorityClass system-node-critical with value 2000001000

I0427 00:06:40.016728 3410 storage_scheduling.go:132] created PriorityClass system-cluster-critical with value 2000000000

I0427 00:06:40.016735 3410 storage_scheduling.go:148] all system priority classes are created successfully or already exist.

I0427 00:06:40.171859 3410 controller.go:611] quota admission added evaluator for: roles.rbac.authorization.k8s.io

I0427 00:06:40.194530 3410 controller.go:611] quota admission added evaluator for: rolebindings.rbac.authorization.k8s.io

W0427 00:06:40.267334 3410 lease.go:233] Resetting endpoints for master service “kubernetes” to [192.168.50.195]

I0427 00:06:40.267944 3410 controller.go:611] quota admission added evaluator for: endpoints

I0427 00:06:40.270391 3410 controller.go:611] quota admission added evaluator for: endpointslices.discovery.k8s.io

time=”2022-04-27T00:06:41Z” level=info msg=”Kube API server is now running”

time=”2022-04-27T00:06:41Z” level=info msg=”k3s is up and running”

time=”2022-04-27T00:06:41Z” level=info msg=”Waiting for cloud-controller-manager privileges to become available”

time=”2022-04-27T00:06:41Z” level=info msg=”Applying CRD addons.k3s.cattle.io”

time=”2022-04-27T00:06:41Z” level=info msg=”Applying CRD helmcharts.helm.cattle.io”

time=”2022-04-27T00:06:41Z” level=info msg=”Applying CRD helmchartconfigs.helm.cattle.io”

time=”2022-04-27T00:06:41Z” level=info msg=”Waiting for CRD helmcharts.helm.cattle.io to become available”

I0427 00:06:41.196587 3410 serving.go:354] Generated self-signed cert in-memory

I0427 00:06:41.506914 3410 controllermanager.go:186] Version: v1.22.7+k3s1

I0427 00:06:41.508272 3410 secure_serving.go:200] Serving securely on 127.0.0.1:10257

I0427 00:06:41.508869 3410 requestheader_controller.go:169] Starting RequestHeaderAuthRequestController

I0427 00:06:41.508874 3410 shared_informer.go:240] Waiting for caches to sync for RequestHeaderAuthRequestController

I0427 00:06:41.508885 3410 tlsconfig.go:240] “Starting DynamicServingCertificateController”

I0427 00:06:41.508926 3410 configmap_cafile_content.go:201] “Starting controller” name=”client-ca::kube-system::extension-apiserver-authentication::client-ca-file”

I0427 00:06:41.508929 3410 shared_informer.go:240] Waiting for caches to sync for client-ca::kube-system::extension-apiserver-authentication::client-ca-file

I0427 00:06:41.508937 3410 configmap_cafile_content.go:201] “Starting controller” name=”client-ca::kube-system::extension-apiserver-authentication::requestheader-client-ca-file”

I0427 00:06:41.508940 3410 shared_informer.go:240] Waiting for caches to sync for client-ca::kube-system::extension-apiserver-authentication::requestheader-client-ca-file

time=”2022-04-27T00:06:41Z” level=info msg=”Done waiting for CRD helmcharts.helm.cattle.io to become available”

time=”2022-04-27T00:06:41Z” level=info msg=”Waiting for CRD helmchartconfigs.helm.cattle.io to become available”

I0427 00:06:41.609911 3410 shared_informer.go:247] Caches are synced for client-ca::kube-system::extension-apiserver-authentication::requestheader-client-ca-file

I0427 00:06:41.609928 3410 shared_informer.go:247] Caches are synced for RequestHeaderAuthRequestController

I0427 00:06:41.609912 3410 shared_informer.go:247] Caches are synced for client-ca::kube-system::extension-apiserver-authentication::client-ca-file

I0427 00:06:41.617368 3410 shared_informer.go:240] Waiting for caches to sync for tokens

I0427 00:06:41.622017 3410 controller.go:611] quota admission added evaluator for: serviceaccounts

I0427 00:06:41.623121 3410 controllermanager.go:577] Started “serviceaccount”

I0427 00:06:41.623193 3410 serviceaccounts_controller.go:117] Starting service account controller

I0427 00:06:41.623203 3410 shared_informer.go:240] Waiting for caches to sync for service account

I0427 00:06:41.626082 3410 controllermanager.go:577] Started “cronjob”

I0427 00:06:41.626125 3410 cronjob_controllerv2.go:126] “Starting cronjob controller v2”

I0427 00:06:41.626129 3410 shared_informer.go:240] Waiting for caches to sync for cronjob

I0427 00:06:41.628610 3410 controllermanager.go:577] Started “csrcleaner”

W0427 00:06:41.628614 3410 controllermanager.go:556] “cloud-node-lifecycle” is disabled

I0427 00:06:41.628679 3410 cleaner.go:82] Starting CSR cleaner controller

I0427 00:06:41.631344 3410 controllermanager.go:577] Started “endpointslicemirroring”

I0427 00:06:41.631425 3410 endpointslicemirroring_controller.go:212] Starting EndpointSliceMirroring controller

I0427 00:06:41.631429 3410 shared_informer.go:240] Waiting for caches to sync for endpoint_slice_mirroring

I0427 00:06:41.634909 3410 controllermanager.go:577] Started “replicationcontroller”

I0427 00:06:41.634971 3410 replica_set.go:186] Starting replicationcontroller controller

I0427 00:06:41.634975 3410 shared_informer.go:240] Waiting for caches to sync for ReplicationController

I0427 00:06:41.638888 3410 controllermanager.go:577] Started “garbagecollector”

I0427 00:06:41.639010 3410 garbagecollector.go:142] Starting garbage collector controller

I0427 00:06:41.639015 3410 shared_informer.go:240] Waiting for caches to sync for garbage collector

I0427 00:06:41.639025 3410 graph_builder.go:289] GraphBuilder running

I0427 00:06:41.646332 3410 controllermanager.go:577] Started “horizontalpodautoscaling”

I0427 00:06:41.646389 3410 horizontal.go:169] Starting HPA controller

I0427 00:06:41.646393 3410 shared_informer.go:240] Waiting for caches to sync for HPA

I0427 00:06:41.718688 3410 shared_informer.go:247] Caches are synced for tokens

W0427 00:06:41.828104 3410 shared_informer.go:494] resyncPeriod 13h35m5.463984082s is smaller than resyncCheckPeriod 22h8m28.09140101s and the informer has already started. Changing it to 22h8m28.09140101s

I0427 00:06:41.828159 3410 resource_quota_monitor.go:229] QuotaMonitor created object count evaluator for cronjobs.batch

I0427 00:06:41.828251 3410 resource_quota_monitor.go:229] QuotaMonitor created object count evaluator for statefulsets.apps

I0427 00:06:41.828277 3410 resource_quota_monitor.go:229] QuotaMonitor created object count evaluator for rolebindings.rbac.authorization.k8s.io

I0427 00:06:41.828286 3410 resource_quota_monitor.go:229] QuotaMonitor created object count evaluator for roles.rbac.authorization.k8s.io

I0427 00:06:41.828300 3410 resource_quota_monitor.go:229] QuotaMonitor created object count evaluator for helmcharts.helm.cattle.io

I0427 00:06:41.828313 3410 resource_quota_monitor.go:229] QuotaMonitor created object count evaluator for limitranges

I0427 00:06:41.828321 3410 resource_quota_monitor.go:229] QuotaMonitor created object count evaluator for poddisruptionbudgets.policy

I0427 00:06:41.828337 3410 resource_quota_monitor.go:229] QuotaMonitor created object count evaluator for daemonsets.apps

I0427 00:06:41.828344 3410 resource_quota_monitor.go:229] QuotaMonitor created object count evaluator for jobs.batch

I0427 00:06:41.828351 3410 resource_quota_monitor.go:229] QuotaMonitor created object count evaluator for networkpolicies.networking.k8s.io

I0427 00:06:41.828361 3410 resource_quota_monitor.go:229] QuotaMonitor created object count evaluator for csistoragecapacities.storage.k8s.io

I0427 00:06:41.828369 3410 resource_quota_monitor.go:229] QuotaMonitor created object count evaluator for endpointslices.discovery.k8s.io

I0427 00:06:41.828375 3410 resource_quota_monitor.go:229] QuotaMonitor created object count evaluator for podtemplates

I0427 00:06:41.828389 3410 resource_quota_monitor.go:229] QuotaMonitor created object count evaluator for helmchartconfigs.helm.cattle.io

I0427 00:06:41.828395 3410 resource_quota_monitor.go:229] QuotaMonitor created object count evaluator for addons.k3s.cattle.io

I0427 00:06:41.828408 3410 resource_quota_monitor.go:229] QuotaMonitor created object count evaluator for horizontalpodautoscalers.autoscaling

I0427 00:06:41.828421 3410 resource_quota_monitor.go:229] QuotaMonitor created object count evaluator for replicasets.apps

I0427 00:06:41.828436 3410 resource_quota_monitor.go:229] QuotaMonitor created object count evaluator for ingresses.networking.k8s.io

I0427 00:06:41.828447 3410 resource_quota_monitor.go:229] QuotaMonitor created object count evaluator for leases.coordination.k8s.io

I0427 00:06:41.828456 3410 resource_quota_monitor.go:229] QuotaMonitor created object count evaluator for controllerrevisions.apps

I0427 00:06:41.828469 3410 resource_quota_monitor.go:229] QuotaMonitor created object count evaluator for deployments.apps

I0427 00:06:41.828486 3410 resource_quota_monitor.go:229] QuotaMonitor created object count evaluator for events.events.k8s.io

W0427 00:06:41.828491 3410 shared_informer.go:494] resyncPeriod 18h22m13.304888526s is smaller than resyncCheckPeriod 22h8m28.09140101s and the informer has already started. Changing it to 22h8m28.09140101s

I0427 00:06:41.828511 3410 resource_quota_monitor.go:229] QuotaMonitor created object count evaluator for serviceaccounts

I0427 00:06:41.828517 3410 resource_quota_monitor.go:229] QuotaMonitor created object count evaluator for endpoints

I0427 00:06:41.828534 3410 controllermanager.go:577] Started “resourcequota”

I0427 00:06:41.828556 3410 resource_quota_controller.go:273] Starting resource quota controller

I0427 00:06:41.828571 3410 shared_informer.go:240] Waiting for caches to sync for resource quota

I0427 00:06:41.828591 3410 resource_quota_monitor.go:304] QuotaMonitor running

I0427 00:06:41.971053 3410 controllermanager.go:577] Started “deployment”

W0427 00:06:41.971063 3410 controllermanager.go:556] “bootstrapsigner” is disabled

I0427 00:06:41.971085 3410 deployment_controller.go:153] “Starting controller” controller=”deployment”

I0427 00:06:41.971090 3410 shared_informer.go:240] Waiting for caches to sync for deployment

time=”2022-04-27T00:06:42Z” level=info msg=”Done waiting for CRD helmchartconfigs.helm.cattle.io to become available”

time=”2022-04-27T00:06:42Z” level=info msg=”Writing static file: /var/lib/rancher/k3s/server/static/charts/traefik-10.14.100.tgz”

time=”2022-04-27T00:06:42Z” level=info msg=”Writing static file: /var/lib/rancher/k3s/server/static/charts/traefik-crd-10.14.100.tgz”

time=”2022-04-27T00:06:42Z” level=info msg=”Failed to get existing traefik HelmChart” error=”helmcharts.helm.cattle.io “traefik” not found”

time=”2022-04-27T00:06:42Z” level=info msg=”Writing manifest: /var/lib/rancher/k3s/server/manifests/ccm.yaml”

time=”2022-04-27T00:06:42Z” level=info msg=”Writing manifest: /var/lib/rancher/k3s/server/manifests/coredns.yaml”

time=”2022-04-27T00:06:42Z” level=info msg=”Writing manifest: /var/lib/rancher/k3s/server/manifests/local-storage.yaml”

time=”2022-04-27T00:06:42Z” level=info msg=”Writing manifest: /var/lib/rancher/k3s/server/manifests/metrics-server/metrics-server-deployment.yaml”

time=”2022-04-27T00:06:42Z” level=info msg=”Writing manifest: /var/lib/rancher/k3s/server/manifests/metrics-server/resource-reader.yaml”

time=”2022-04-27T00:06:42Z” level=info msg=”Writing manifest: /var/lib/rancher/k3s/server/manifests/traefik.yaml”

time=”2022-04-27T00:06:42Z” level=info msg=”Writing manifest: /var/lib/rancher/k3s/server/manifests/metrics-server/aggregated-metrics-reader.yaml”

time=”2022-04-27T00:06:42Z” level=info msg=”Writing manifest: /var/lib/rancher/k3s/server/manifests/metrics-server/auth-delegator.yaml”

time=”2022-04-27T00:06:42Z” level=info msg=”Writing manifest: /var/lib/rancher/k3s/server/manifests/metrics-server/auth-reader.yaml”

time=”2022-04-27T00:06:42Z” level=info msg=”Writing manifest: /var/lib/rancher/k3s/server/manifests/metrics-server/metrics-apiservice.yaml”

time=”2022-04-27T00:06:42Z” level=info msg=”Writing manifest: /var/lib/rancher/k3s/server/manifests/metrics-server/metrics-server-service.yaml”

time=”2022-04-27T00:06:42Z” level=info msg=”Writing manifest: /var/lib/rancher/k3s/server/manifests/rolebindings.yaml”

W0427 00:06:42.120637 3410 probe.go:268] Flexvolume plugin directory at /usr/libexec/kubernetes/kubelet-plugins/volume/exec/ does not exist. Recreating.

I0427 00:06:42.125926 3410 controllermanager.go:577] Started “attachdetach”

W0427 00:06:42.125935 3410 controllermanager.go:556] “tokencleaner” is disabled

I0427 00:06:42.125987 3410 attach_detach_controller.go:328] Starting attach detach controller

I0427 00:06:42.125993 3410 shared_informer.go:240] Waiting for caches to sync for attach detach

time=”2022-04-27T00:06:42Z” level=info msg=”Starting k3s.cattle.io/v1, Kind=Addon controller”

time=”2022-04-27T00:06:42Z” level=info msg=”Creating deploy event broadcaster”

time=”2022-04-27T00:06:42Z” level=info msg=”Waiting for control-plane node lima-rancher-desktop startup: nodes “lima-rancher-desktop” not found”

time=”2022-04-27T00:06:42Z” level=info msg=”Cluster dns configmap has been set successfully”

I0427 00:06:42.305175 3410 node_ipam_controller.go:91] Sending events to api server.

time=”2022-04-27T00:06:42Z” level=info msg=”Starting helm.cattle.io/v1, Kind=HelmChart controller”

time=”2022-04-27T00:06:42Z” level=info msg=”Starting helm.cattle.io/v1, Kind=HelmChartConfig controller”

time=”2022-04-27T00:06:42Z” level=info msg=”Starting apps/v1, Kind=Deployment controller”

time=”2022-04-27T00:06:42Z” level=info msg=”Starting apps/v1, Kind=DaemonSet controller”

time=”2022-04-27T00:06:42Z” level=info msg=”Starting rbac.authorization.k8s.io/v1, Kind=ClusterRoleBinding controller”

time=”2022-04-27T00:06:42Z” level=info msg=”Starting batch/v1, Kind=Job controller”

time=”2022-04-27T00:06:42Z” level=info msg=”Starting /v1, Kind=Node controller”

time=”2022-04-27T00:06:42Z” level=info msg=”Starting /v1, Kind=ConfigMap controller”

time=”2022-04-27T00:06:42Z” level=info msg=”Starting /v1, Kind=ServiceAccount controller”

time=”2022-04-27T00:06:42Z” level=info msg=”Starting /v1, Kind=Pod controller”

time=”2022-04-27T00:06:42Z” level=info msg=”Starting /v1, Kind=Service controller”

time=”2022-04-27T00:06:42Z” level=info msg=”Starting /v1, Kind=Endpoints controller”

time=”2022-04-27T00:06:42Z” level=info msg=”Connecting to proxy” url=”wss://127.0.0.1:6443/v1-k3s/connect”

time=”2022-04-27T00:06:42Z” level=info msg=”Handling backend connection request [lima-rancher-desktop]”

time="2022-04-27T00:06:42Z" level=info msg="Running kubelet --address=0.0.0.0 --anonymous-auth=false --authentication-token-webhook=true --authorization-mode=Webhook --cgroup-driver=cgroupfs --client-ca-file=/var/lib/rancher/k3s/agent/client-ca.crt --cloud-provider=external --cluster-dns=10.43.0.10 --cluster-domain=cluster.local --cni-bin-dir=/var/lib/rancher/k3s/data/cefe7380a851b348db6a6b899546b24f9e19b38f3b34eca24bdf84853943b0bb/bin --cni-conf-dir=/var/lib/rancher/k3s/agent/etc/cni/net.d --container-runtime-endpoint=unix:///run/k3s/containerd/containerd.sock --container-runtime=remote --containerd=/run/k3s/containerd/containerd.sock --eviction-hard=imagefs.available<5%,nodefs.available<5% --eviction-minimum-reclaim=imagefs.available=10%,nodefs.available=10% --fail-swap-on=false --healthz-bind-address=127.0.0.1 --hostname-override=lima-rancher-desktop --kubeconfig=/var/lib/rancher/k3s/agent/kubelet.kubeconfig --network-plugin=cni --node-ip=192.168.50.195 --node-labels= --pod-manifest-path=/var/lib/rancher/k3s/agent/pod-manifests --read-only-port=0 --resolv-conf=/etc/resolv.conf --serialize-image-pulls=false --tls-cert-file=/var/lib/rancher/k3s/agent/serving-kubelet.crt --tls-private-key-file=/var/lib/rancher/k3s/agent/serving-kubelet.key"

Flag --cloud-provider has been deprecated, will be removed in 1.23, in favor of removing cloud provider code from Kubelet.

Flag --cni-bin-dir has been deprecated, will be removed along with dockershim.

Flag --cni-conf-dir has been deprecated, will be removed along with dockershim.

Flag --containerd has been deprecated, This is a cadvisor flag that was mistakenly registered with the Kubelet. Due to legacy concerns, it will follow the standard CLI deprecation timeline before being removed.

Flag --network-plugin has been deprecated, will be removed along with dockershim.

I0427 00:06:42.856396 3410 server.go:436] "Kubelet version" kubeletVersion="v1.22.7+k3s1"

time=”2022-04-27T00:06:42Z” level=info msg=”Starting /v1, Kind=Secret controller”

I0427 00:06:42.883299 3410 dynamic_cafile_content.go:155] “Starting controller” name=”client-ca-bundle::/var/lib/rancher/k3s/agent/client-ca.crt”

time=”2022-04-27T00:06:42Z” level=info msg=”Updating TLS secret for k3s-serving (count: 9): map[listener.cattle.io/cn-10.43.0.1:10.43.0.1 listener.cattle.io/cn-127.0.0.1:127.0.0.1 listener.cattle.io/cn-192.168.50.195:192.168.50.195 listener.cattle.io/cn-kubernetes:kubernetes listener.cattle.io/cn-kubernetes.default:kubernetes.default listener.cattle.io/cn-kubernetes.default.svc:kubernetes.default.svc listener.cattle.io/cn-kubernetes.default.svc.cluster.local:kubernetes.default.svc.cluster.local listener.cattle.io/cn-lima-rancher-desktop:lima-rancher-desktop listener.cattle.io/cn-localhost:localhost listener.cattle.io/fingerprint:SHA1=30973A01DF766F8C3E62C41DA4AAB3D1CED3F3F1]”

time=”2022-04-27T00:06:43Z” level=info msg=”Active TLS secret k3s-serving (ver=241) (count 9): map[listener.cattle.io/cn-10.43.0.1:10.43.0.1 listener.cattle.io/cn-127.0.0.1:127.0.0.1 listener.cattle.io/cn-192.168.50.195:192.168.50.195 listener.cattle.io/cn-kubernetes:kubernetes listener.cattle.io/cn-kubernetes.default:kubernetes.default listener.cattle.io/cn-kubernetes.default.svc:kubernetes.default.svc listener.cattle.io/cn-kubernetes.default.svc.cluster.local:kubernetes.default.svc.cluster.local listener.cattle.io/cn-lima-rancher-desktop:lima-rancher-desktop listener.cattle.io/cn-localhost:localhost listener.cattle.io/fingerprint:SHA1=30973A01DF766F8C3E62C41DA4AAB3D1CED3F3F1]”

time="2022-04-27T00:06:43Z" level=info msg="Running kube-proxy --cluster-cidr=10.42.0.0/16 --conntrack-max-per-core=0 --conntrack-tcp-timeout-close-wait=0s --conntrack-tcp-timeout-established=0s --healthz-bind-address=127.0.0.1 --hostname-override=lima-rancher-desktop --kubeconfig=/var/lib/rancher/k3s/agent/kubeproxy.kubeconfig --proxy-mode=iptables"

W0427 00:06:43.107857 3410 server.go:224] WARNING: all flags other than --config, --write-config-to, and --cleanup are deprecated. Please begin using a config file ASAP.

E0427 00:06:43.143019 3410 node.go:161] Failed to retrieve node info: nodes “lima-rancher-desktop” not found

time=”2022-04-27T00:06:43Z” level=info msg=”Waiting for control-plane node lima-rancher-desktop startup: nodes “lima-rancher-desktop” not found”

I0427 00:06:44.227460 3410 controller.go:611] quota admission added evaluator for: addons.k3s.cattle.io

time=”2022-04-27T00:06:44Z” level=info msg=”Event(v1.ObjectReference{Kind:”Addon”, Namespace:”kube-system”, Name:”ccm”, UID:”a4f9c788-25b3-4838-8318-5cdbced8899a”, APIVersion:”k3s.cattle.io/v1″, ResourceVersion:”243″, FieldPath:””}): type: ‘Normal’ reason: ‘ApplyingManifest’ Applying manifest at “/var/lib/rancher/k3s/server/manifests/ccm.yaml””

E0427 00:06:44.235553 3410 node.go:161] Failed to retrieve node info: nodes “lima-rancher-desktop” not found

time=”2022-04-27T00:06:44Z” level=info msg=”Event(v1.ObjectReference{Kind:”Addon”, Namespace:”kube-system”, Name:”ccm”, UID:”a4f9c788-25b3-4838-8318-5cdbced8899a”, APIVersion:”k3s.cattle.io/v1″, ResourceVersion:”243″, FieldPath:””}): type: ‘Normal’ reason: ‘AppliedManifest’ Applied manifest at “/var/lib/rancher/k3s/server/manifests/ccm.yaml””

time=”2022-04-27T00:06:44Z” level=info msg=”Event(v1.ObjectReference{Kind:”Addon”, Namespace:”kube-system”, Name:”coredns”, UID:”a20ed8dd-79ce-48ee-b480-1319e7b19d04″, APIVersion:”k3s.cattle.io/v1″, ResourceVersion:”251″, FieldPath:””}): type: ‘Normal’ reason: ‘ApplyingManifest’ Applying manifest at “/var/lib/rancher/k3s/server/manifests/coredns.yaml””

I0427 00:06:44.339043 3410 controller.go:611] quota admission added evaluator for: deployments.apps

time=”2022-04-27T00:06:44Z” level=info msg=”Event(v1.ObjectReference{Kind:”Addon”, Namespace:”kube-system”, Name:”coredns”, UID:”a20ed8dd-79ce-48ee-b480-1319e7b19d04″, APIVersion:”k3s.cattle.io/v1″, ResourceVersion:”251″, FieldPath:””}): type: ‘Normal’ reason: ‘AppliedManifest’ Applied manifest at “/var/lib/rancher/k3s/server/manifests/coredns.yaml””

time=”2022-04-27T00:06:44Z” level=info msg=”Event(v1.ObjectReference{Kind:”Addon”, Namespace:”kube-system”, Name:”local-storage”, UID:”f04a59dc-eee7-4f97-9254-9cb95edb7b55″, APIVersion:”k3s.cattle.io/v1″, ResourceVersion:”264″, FieldPath:””}): type: ‘Normal’ reason: ‘ApplyingManifest’ Applying manifest at “/var/lib/rancher/k3s/server/manifests/local-storage.yaml””

time=”2022-04-27T00:06:44Z” level=info msg=”Event(v1.ObjectReference{Kind:”Addon”, Namespace:”kube-system”, Name:”local-storage”, UID:”f04a59dc-eee7-4f97-9254-9cb95edb7b55″, APIVersion:”k3s.cattle.io/v1″, ResourceVersion:”264″, FieldPath:””}): type: ‘Normal’ reason: ‘AppliedManifest’ Applied manifest at “/var/lib/rancher/k3s/server/manifests/local-storage.yaml””

time=”2022-04-27T00:06:44Z” level=info msg=”Event(v1.ObjectReference{Kind:”Addon”, Namespace:”kube-system”, Name:”aggregated-metrics-reader”, UID:”c8d8bcae-e8ee-412e-9b58-66c3bf827010″, APIVersion:”k3s.cattle.io/v1″, ResourceVersion:”276″, FieldPath:””}): type: ‘Normal’ reason: ‘ApplyingManifest’ Applying manifest at “/var/lib/rancher/k3s/server/manifests/metrics-server/aggregated-metrics-reader.yaml””

time=”2022-04-27T00:06:44Z” level=info msg=”Event(v1.ObjectReference{Kind:”Addon”, Namespace:”kube-system”, Name:”aggregated-metrics-reader”, UID:”c8d8bcae-e8ee-412e-9b58-66c3bf827010″, APIVersion:”k3s.cattle.io/v1″, ResourceVersion:”276″, FieldPath:””}): type: ‘Normal’ reason: ‘AppliedManifest’ Applied manifest at “/var/lib/rancher/k3s/server/manifests/metrics-server/aggregated-metrics-reader.yaml””

time=”2022-04-27T00:06:44Z” level=info msg=”Event(v1.ObjectReference{Kind:”Addon”, Namespace:”kube-system”, Name:”auth-delegator”, UID:”12f392c0-3364-494e-984d-b63a6ad74205″, APIVersion:”k3s.cattle.io/v1″, ResourceVersion:”281″, FieldPath:””}): type: ‘Normal’ reason: ‘ApplyingManifest’ Applying manifest at “/var/lib/rancher/k3s/server/manifests/metrics-server/auth-delegator.yaml””

time=”2022-04-27T00:06:44Z” level=info msg=”Event(v1.ObjectReference{Kind:”Addon”, Namespace:”kube-system”, Name:”auth-delegator”, UID:”12f392c0-3364-494e-984d-b63a6ad74205″, APIVersion:”k3s.cattle.io/v1″, ResourceVersion:”281″, FieldPath:””}): type: ‘Normal’ reason: ‘AppliedManifest’ Applied manifest at “/var/lib/rancher/k3s/server/manifests/metrics-server/auth-delegator.yaml””

time=”2022-04-27T00:06:44Z” level=info msg=”Event(v1.ObjectReference{Kind:”Addon”, Namespace:”kube-system”, Name:”auth-reader”, UID:”c1e4cc83-6043-4db3-a0ac-6c64d50671f3″, APIVersion:”k3s.cattle.io/v1″, ResourceVersion:”286″, FieldPath:””}): type: ‘Normal’ reason: ‘ApplyingManifest’ Applying manifest at “/var/lib/rancher/k3s/server/manifests/metrics-server/auth-reader.yaml””

time=”2022-04-27T00:06:44Z” level=info msg=”Event(v1.ObjectReference{Kind:”Addon”, Namespace:”kube-system”, Name:”auth-reader”, UID:”c1e4cc83-6043-4db3-a0ac-6c64d50671f3″, APIVersion:”k3s.cattle.io/v1″, ResourceVersion:”286″, FieldPath:””}): type: ‘Normal’ reason: ‘AppliedManifest’ Applied manifest at “/var/lib/rancher/k3s/server/manifests/metrics-server/auth-reader.yaml””

time=”2022-04-27T00:06:44Z” level=info msg=”Waiting for control-plane node lima-rancher-desktop startup: nodes “lima-rancher-desktop” not found”

time=”2022-04-27T00:06:44Z” level=info msg=”Event(v1.ObjectReference{Kind:”Addon”, Namespace:”kube-system”, Name:”metrics-apiservice”, UID:”33699bc7-9012-4352-a3a6-e52b2c7080ca”, APIVersion:”k3s.cattle.io/v1″, ResourceVersion:”291″, FieldPath:””}): type: ‘Normal’ reason: ‘ApplyingManifest’ Applying manifest at “/var/lib/rancher/k3s/server/manifests/metrics-server/metrics-apiservice.yaml””

time=”2022-04-27T00:06:44Z” level=info msg=”Event(v1.ObjectReference{Kind:”Addon”, Namespace:”kube-system”, Name:”metrics-apiservice”, UID:”33699bc7-9012-4352-a3a6-e52b2c7080ca”, APIVersion:”k3s.cattle.io/v1″, ResourceVersion:”291″, FieldPath:””}): type: ‘Normal’ reason: ‘AppliedManifest’ Applied manifest at “/var/lib/rancher/k3s/server/manifests/metrics-server/metrics-apiservice.yaml””

time=”2022-04-27T00:06:45Z” level=info msg=”Event(v1.ObjectReference{Kind:”Addon”, Namespace:”kube-system”, Name:”metrics-server-deployment”, UID:”a730adae-b10e-4af4-b8d9-af6d1c06de85″, APIVersion:”k3s.cattle.io/v1″, ResourceVersion:”297″, FieldPath:””}): type: ‘Normal’ reason: ‘ApplyingManifest’ Applying manifest at “/var/lib/rancher/k3s/server/manifests/metrics-server/metrics-server-deployment.yaml””

time=”2022-04-27T00:06:45Z” level=info msg=”Event(v1.ObjectReference{Kind:”Addon”, Namespace:”kube-system”, Name:”metrics-server-deployment”, UID:”a730adae-b10e-4af4-b8d9-af6d1c06de85″, APIVersion:”k3s.cattle.io/v1″, ResourceVersion:”297″, FieldPath:””}): type: ‘Normal’ reason: ‘AppliedManifest’ Applied manifest at “/var/lib/rancher/k3s/server/manifests/metrics-server/metrics-server-deployment.yaml””

I0427 00:06:45.337389 3410 serving.go:354] Generated self-signed cert in-memory

time=”2022-04-27T00:06:45Z” level=info msg=”Waiting for control-plane node lima-rancher-desktop startup: nodes “lima-rancher-desktop” not found”

time=”2022-04-27T00:06:45Z” level=info msg=”Event(v1.ObjectReference{Kind:”Addon”, Namespace:”kube-system”, Name:”metrics-server-service”, UID:”55f26111-4a25-4d23-ac32-491db3c39a0d”, APIVersion:”k3s.cattle.io/v1″, ResourceVersion:”305″, FieldPath:””}): type: ‘Normal’ reason: ‘ApplyingManifest’ Applying manifest at “/var/lib/rancher/k3s/server/manifests/metrics-server/metrics-server-service.yaml””

time=”2022-04-27T00:06:45Z” level=info msg=”Event(v1.ObjectReference{Kind:”Addon”, Namespace:”kube-system”, Name:”metrics-server-service”, UID:”55f26111-4a25-4d23-ac32-491db3c39a0d”, APIVersion:”k3s.cattle.io/v1″, ResourceVersion:”305″, FieldPath:””}): type: ‘Normal’ reason: ‘AppliedManifest’ Applied manifest at “/var/lib/rancher/k3s/server/manifests/metrics-server/metrics-server-service.yaml””

I0427 00:06:45.599929 3410 controllermanager.go:142] Version: v1.22.7+k3s1

I0427 00:06:45.602220 3410 secure_serving.go:200] Serving securely on 127.0.0.1:10258

I0427 00:06:45.602277 3410 tlsconfig.go:240] “Starting DynamicServingCertificateController”

I0427 00:06:45.602312 3410 requestheader_controller.go:169] Starting RequestHeaderAuthRequestController

I0427 00:06:45.602324 3410 shared_informer.go:240] Waiting for caches to sync for RequestHeaderAuthRequestController

I0427 00:06:45.602368 3410 configmap_cafile_content.go:201] “Starting controller” name=”client-ca::kube-system::extension-apiserver-authentication::client-ca-file”

I0427 00:06:45.602372 3410 shared_informer.go:240] Waiting for caches to sync for client-ca::kube-system::extension-apiserver-authentication::client-ca-file

I0427 00:06:45.602381 3410 configmap_cafile_content.go:201] “Starting controller” name=”client-ca::kube-system::extension-apiserver-authentication::requestheader-client-ca-file”

I0427 00:06:45.602383 3410 shared_informer.go:240] Waiting for caches to sync for client-ca::kube-system::extension-apiserver-authentication::requestheader-client-ca-file

I0427 00:06:45.702960 3410 shared_informer.go:247] Caches are synced for client-ca::kube-system::extension-apiserver-authentication::requestheader-client-ca-file

I0427 00:06:45.702982 3410 shared_informer.go:247] Caches are synced for client-ca::kube-system::extension-apiserver-authentication::client-ca-file

I0427 00:06:45.703001 3410 shared_informer.go:247] Caches are synced for RequestHeaderAuthRequestController

W0427 00:06:45.735722 3410 handler_proxy.go:104] no RequestInfo found in the context

E0427 00:06:45.735785 3410 controller.go:116] loading OpenAPI spec for “v1beta1.metrics.k8s.io” failed with: failed to retrieve openAPI spec, http error: ResponseCode: 503, Body: service unavailable

, Header: map[Content-Type:[text/plain; charset=utf-8] X-Content-Type-Options:[nosniff]]

I0427 00:06:45.735795 3410 controller.go:129] OpenAPI AggregationController: action for item v1beta1.metrics.k8s.io: Rate Limited Requeue.

time=”2022-04-27T00:06:45Z” level=warning msg=”Unable to watch for tunnel endpoints: unknown (get endpoints)”

time=”2022-04-27T00:06:45Z” level=info msg=”Event(v1.ObjectReference{Kind:”Addon”, Namespace:”kube-system”, Name:”resource-reader”, UID:”0d2ce913-30ee-47e5-90cb-8ca8787e45a7″, APIVersion:”k3s.cattle.io/v1″, ResourceVersion:”312″, FieldPath:””}): type: ‘Normal’ reason: ‘ApplyingManifest’ Applying manifest at “/var/lib/rancher/k3s/server/manifests/metrics-server/resource-reader.yaml””

time=”2022-04-27T00:06:45Z” level=info msg=”Event(v1.ObjectReference{Kind:”Addon”, Namespace:”kube-system”, Name:”resource-reader”, UID:”0d2ce913-30ee-47e5-90cb-8ca8787e45a7″, APIVersion:”k3s.cattle.io/v1″, ResourceVersion:”312″, FieldPath:””}): type: ‘Normal’ reason: ‘AppliedManifest’ Applied manifest at “/var/lib/rancher/k3s/server/manifests/metrics-server/resource-reader.yaml””

time=”2022-04-27T00:06:46Z” level=info msg=”Event(v1.ObjectReference{Kind:”Addon”, Namespace:”kube-system”, Name:”rolebindings”, UID:”7cbd004f-59c8-4c9b-8409-0b04a72d079c”, APIVersion:”k3s.cattle.io/v1″, ResourceVersion:”318″, FieldPath:””}): type: ‘Normal’ reason: ‘ApplyingManifest’ Applying manifest at “/var/lib/rancher/k3s/server/manifests/rolebindings.yaml””

time=”2022-04-27T00:06:46Z” level=info msg=”Event(v1.ObjectReference{Kind:”Addon”, Namespace:”kube-system”, Name:”rolebindings”, UID:”7cbd004f-59c8-4c9b-8409-0b04a72d079c”, APIVersion:”k3s.cattle.io/v1″, ResourceVersion:”318″, FieldPath:””}): type: ‘Normal’ reason: ‘AppliedManifest’ Applied manifest at “/var/lib/rancher/k3s/server/manifests/rolebindings.yaml””

time=”2022-04-27T00:06:46Z” level=info msg=”Waiting for control-plane node lima-rancher-desktop startup: nodes “lima-rancher-desktop” not found”

E0427 00:06:46.548434 3410 node.go:161] Failed to retrieve node info: nodes “lima-rancher-desktop” not found

time=”2022-04-27T00:06:46Z” level=info msg=”Event(v1.ObjectReference{Kind:”Addon”, Namespace:”kube-system”, Name:”traefik”, UID:”2c8fc2cc-a2ce-472c-8bde-6b4aadc401c2″, APIVersion:”k3s.cattle.io/v1″, ResourceVersion:”325″, FieldPath:””}): type: ‘Normal’ reason: ‘ApplyingManifest’ Applying manifest at “/var/lib/rancher/k3s/server/manifests/traefik.yaml””

I0427 00:06:46.748145 3410 controller.go:611] quota admission added evaluator for: helmcharts.helm.cattle.io

time=”2022-04-27T00:06:46Z” level=info msg=”Event(v1.ObjectReference{Kind:”Addon”, Namespace:”kube-system”, Name:”traefik”, UID:”2c8fc2cc-a2ce-472c-8bde-6b4aadc401c2″, APIVersion:”k3s.cattle.io/v1″, ResourceVersion:”325″, FieldPath:””}): type: ‘Normal’ reason: ‘AppliedManifest’ Applied manifest at “/var/lib/rancher/k3s/server/manifests/traefik.yaml””

I0427 00:06:46.820787 3410 controller.go:611] quota admission added evaluator for: jobs.batch

I0427 00:06:46.859212 3410 request.go:665] Waited for 1.000732334s due to client-side throttling, not priority and fairness, request: GET:https://127.0.0.1:6444/apis/storage.k8s.io/v1beta1?timeout=32s

E0427 00:06:47.417880 3410 controllermanager.go:419] unable to get all supported resources from server: unable to retrieve the complete list of server APIs: metrics.k8s.io/v1beta1: the server is currently unable to handle the request

I0427 00:06:47.443003 3410 node_controller.go:115] Sending events to api server.

I0427 00:06:47.448120 3410 controllermanager.go:285] Started “cloud-node”

I0427 00:06:47.448164 3410 node_controller.go:154] Waiting for informer caches to sync

I0427 00:06:47.462998 3410 node_lifecycle_controller.go:76] Sending events to api server

I0427 00:06:47.463091 3410 controllermanager.go:285] Started “cloud-node-lifecycle”

time=”2022-04-27T00:06:47Z” level=info msg=”Waiting for control-plane node lima-rancher-desktop startup: nodes “lima-rancher-desktop” not found”

I0427 00:06:47.907846 3410 server.go:687] "--cgroups-per-qos enabled, but --cgroup-root was not specified. defaulting to /"

I0427 00:06:47.908642 3410 container_manager_linux.go:280] “Container manager verified user specified cgroup-root exists” cgroupRoot=[]

I0427 00:06:47.908725 3410 container_manager_linux.go:285] “Creating Container Manager object based on Node Config” nodeConfig={RuntimeCgroupsName: SystemCgroupsName: KubeletCgroupsName: ContainerRuntime:remote CgroupsPerQOS:true CgroupRoot:/ CgroupDriver:cgroupfs KubeletRootDir:/var/lib/kubelet ProtectKernelDefaults:false NodeAllocatableConfig:{KubeReservedCgroupName: SystemReservedCgroupName: ReservedSystemCPUs: EnforceNodeAllocatable:map[pods:{}] KubeReserved:map[] SystemReserved:map[] HardEvictionThresholds:[{Signal:imagefs.available Operator:LessThan Value:{Quantity: Percentage:0.05} GracePeriod:0s MinReclaim:} {Signal:nodefs.available Operator:LessThan Value:{Quantity: Percentage:0.05} GracePeriod:0s MinReclaim:}]} QOSReserved:map[] ExperimentalCPUManagerPolicy:none ExperimentalCPUManagerPolicyOptions:map[] ExperimentalTopologyManagerScope:container ExperimentalCPUManagerReconcilePeriod:10s ExperimentalMemoryManagerPolicy:None ExperimentalMemoryManagerReservedMemory:[] ExperimentalPodPidsLimit:-1 EnforceCPULimits:true CPUCFSQuotaPeriod:100ms ExperimentalTopologyManagerPolicy:none}

I0427 00:06:47.908971 3410 topology_manager.go:133] “Creating topology manager with policy per scope” topologyPolicyName=”none” topologyScopeName=”container”

I0427 00:06:47.908981 3410 container_manager_linux.go:320] “Creating device plugin manager” devicePluginEnabled=true

I0427 00:06:47.909187 3410 state_mem.go:36] “Initialized new in-memory state store”

I0427 00:06:47.915742 3410 kubelet.go:418] “Attempting to sync node with API server”

I0427 00:06:47.915771 3410 kubelet.go:279] “Adding static pod path” path=”/var/lib/rancher/k3s/agent/pod-manifests”

I0427 00:06:47.916031 3410 kubelet.go:290] “Adding apiserver pod source”

I0427 00:06:47.916066 3410 apiserver.go:42] “Waiting for node sync before watching apiserver pods”

I0427 00:06:47.924359 3410 apiserver.go:52] “Watching apiserver”

I0427 00:06:47.937056 3410 kuberuntime_manager.go:245] “Container runtime initialized” containerRuntime=”containerd” version=”v1.5.8″ apiVersion=”v1alpha2″

I0427 00:06:47.973018 3410 server.go:1213] “Started kubelet”

I0427 00:06:47.976713 3410 fs_resource_analyzer.go:67] “Starting FS ResourceAnalyzer”

I0427 00:06:47.979409 3410 server.go:149] “Starting to listen” address=”0.0.0.0″ port=10250

I0427 00:06:47.980633 3410 server.go:409] “Adding debug handlers to kubelet server”

W0427 00:06:47.983448 3410 oomparser.go:173] error reading /dev/kmsg: read /dev/kmsg: broken pipe

E0427 00:06:47.983465 3410 oomparser.go:149] exiting analyzeLines. OOM events will not be reported.

I0427 00:06:47.983860 3410 volume_manager.go:291] “Starting Kubelet Volume Manager”

I0427 00:06:47.987349 3410 desired_state_of_world_populator.go:146] “Desired state populator starts to run”

E0427 00:06:47.988785 3410 cri_stats_provider.go:372] “Failed to get the info of the filesystem with mountpoint” err=”unable to find data in memory cache” mountpoint=”/var/lib/rancher/k3s/agent/containerd/io.containerd.snapshotter.v1.overlayfs”

E0427 00:06:47.988808 3410 kubelet.go:1343] “Image garbage collection failed once. Stats initialization may not have completed yet” err=”invalid capacity 0 on image filesystem”

W0427 00:06:47.999836 3410 oomparser.go:173] error reading /dev/kmsg: read /dev/kmsg: broken pipe

E0427 00:06:47.999845 3410 oomparser.go:149] exiting analyzeLines. OOM events will not be reported.

E0427 00:06:48.010598 3410 nodelease.go:49] “Failed to get node when trying to set owner ref to the node lease” err=”nodes “lima-rancher-desktop” not found” node=”lima-rancher-desktop”

I0427 00:06:48.011082 3410 cpu_manager.go:209] “Starting CPU manager” policy=”none”

I0427 00:06:48.011090 3410 cpu_manager.go:210] “Reconciling” reconcilePeriod=”10s”

I0427 00:06:48.011098 3410 state_mem.go:36] “Initialized new in-memory state store”

I0427 00:06:48.027063 3410 kubelet_network_linux.go:56] “Initialized protocol iptables rules.” protocol=IPv4

I0427 00:06:48.066613 3410 policy_none.go:49] “None policy: Start”

I0427 00:06:48.067101 3410 memory_manager.go:168] “Starting memorymanager” policy=”None”

I0427 00:06:48.067118 3410 state_mem.go:35] “Initializing new in-memory state store”

E0427 00:06:48.084768 3410 kubelet.go:2412] “Error getting node” err=”node “lima-rancher-desktop” not found”

I0427 00:06:48.085702 3410 kubelet_node_status.go:71] “Attempting to register node” node=”lima-rancher-desktop”

I0427 00:06:48.092044 3410 node_controller.go:390] Initializing node lima-rancher-desktop with cloud provider

E0427 00:06:48.092082 3410 node_controller.go:212] error syncing ‘lima-rancher-desktop’: failed to get provider ID for node lima-rancher-desktop at cloudprovider: failed to get instance ID from cloud provider: address annotations not yet set, requeuing

I0427 00:06:48.092207 3410 kubelet_node_status.go:74] “Successfully registered node” node=”lima-rancher-desktop”

time=”2022-04-27T00:06:48Z” level=info msg=”Updated coredns node hosts entry [192.168.50.195 lima-rancher-desktop]”

I0427 00:06:48.097111 3410 node_controller.go:390] Initializing node lima-rancher-desktop with cloud provider

E0427 00:06:48.097140 3410 node_controller.go:212] error syncing ‘lima-rancher-desktop’: failed to get provider ID for node lima-rancher-desktop at cloudprovider: failed to get instance ID from cloud provider: address annotations not yet set, requeuing

time=”2022-04-27T00:06:48Z” level=info msg=”Failed to update node lima-rancher-desktop: Operation cannot be fulfilled on nodes “lima-rancher-desktop”: the object has been modified; please apply your changes to the latest version and try again”

I0427 00:06:48.106875 3410 node_controller.go:390] Initializing node lima-rancher-desktop with cloud provider

E0427 00:06:48.106901 3410 node_controller.go:212] error syncing ‘lima-rancher-desktop’: failed to get provider ID for node lima-rancher-desktop at cloudprovider: failed to get instance ID from cloud provider: address annotations not yet set, requeuing

I0427 00:06:48.107528 3410 node_controller.go:390] Initializing node lima-rancher-desktop with cloud provider

E0427 00:06:48.107548 3410 node_controller.go:212] error syncing ‘lima-rancher-desktop’: failed to get provider ID for node lima-rancher-desktop at cloudprovider: failed to get instance ID from cloud provider: address annotations not yet set, requeuing

time=”2022-04-27T00:06:48Z” level=info msg=”labels have been set successfully on node: lima-rancher-desktop”

time=”2022-04-27T00:06:48Z” level=info msg=”Starting flannel with backend vxlan”

I0427 00:06:48.117994 3410 node_controller.go:390] Initializing node lima-rancher-desktop with cloud provider

I0427 00:06:48.136053 3410 kubelet_network_linux.go:56] “Initialized protocol iptables rules.” protocol=IPv6

I0427 00:06:48.136071 3410 status_manager.go:158] “Starting to sync pod status with apiserver”

I0427 00:06:48.136081 3410 kubelet.go:1967] “Starting kubelet main sync loop”

E0427 00:06:48.136118 3410 kubelet.go:1991] “Skipping pod synchronization” err=”[container runtime status check may not have completed yet, PLEG is not healthy: pleg has yet to be successful]”

I0427 00:06:48.143044 3410 manager.go:609] “Failed to read data from checkpoint” checkpoint=”kubelet_internal_checkpoint” err=”checkpoint is not found”

I0427 00:06:48.143205 3410 plugin_manager.go:114] “Starting Kubelet Plugin Manager”

I0427 00:06:48.203622 3410 node_controller.go:454] Successfully initialized node lima-rancher-desktop with cloud provider

I0427 00:06:48.203697 3410 event.go:291] “Event occurred” object=”lima-rancher-desktop” kind=”Node” apiVersion=”v1″ type=”Normal” reason=”Synced” message=”Node synced successfully”

I0427 00:06:48.288932 3410 reconciler.go:157] “Reconciler: start to sync state”

I0427 00:06:48.437088 3410 serving.go:354] Generated self-signed cert in-memory

time=”2022-04-27T00:06:48Z” level=info msg=”Labels and annotations have been set successfully on node: lima-rancher-desktop”

W0427 00:06:48.723219 3410 authorization.go:47] Authorization is disabled

W0427 00:06:48.723228 3410 authentication.go:47] Authentication is disabled

I0427 00:06:48.723236 3410 deprecated_insecure_serving.go:54] Serving healthz insecurely on [::]:10251

I0427 00:06:48.725126 3410 requestheader_controller.go:169] Starting RequestHeaderAuthRequestController

I0427 00:06:48.725140 3410 shared_informer.go:240] Waiting for caches to sync for RequestHeaderAuthRequestController

I0427 00:06:48.725155 3410 configmap_cafile_content.go:201] “Starting controller” name=”client-ca::kube-system::extension-apiserver-authentication::client-ca-file”

I0427 00:06:48.725160 3410 shared_informer.go:240] Waiting for caches to sync for client-ca::kube-system::extension-apiserver-authentication::client-ca-file

I0427 00:06:48.725167 3410 configmap_cafile_content.go:201] “Starting controller” name=”client-ca::kube-system::extension-apiserver-authentication::requestheader-client-ca-file”

I0427 00:06:48.725169 3410 shared_informer.go:240] Waiting for caches to sync for client-ca::kube-system::extension-apiserver-authentication::requestheader-client-ca-file

I0427 00:06:48.725264 3410 secure_serving.go:200] Serving securely on 127.0.0.1:10259

I0427 00:06:48.725299 3410 tlsconfig.go:240] "Starting DynamicServingCertificateController"

I0427 00:06:48.825207 3410 shared_informer.go:247] Caches are synced for client-ca::kube-system::extension-apiserver-authentication::requestheader-client-ca-file

I0427 00:06:48.825230 3410 shared_informer.go:247] Caches are synced for RequestHeaderAuthRequestController

I0427 00:06:48.825238 3410 shared_informer.go:247] Caches are synced for client-ca::kube-system::extension-apiserver-authentication::client-ca-file

time="2022-04-27T00:06:50Z" level=info msg="Stopped tunnel to 127.0.0.1:6443"

time="2022-04-27T00:06:50Z" level=info msg="Connecting to proxy" url="wss://192.168.50.195:6443/v1-k3s/connect"

time="2022-04-27T00:06:50Z" level=info msg="Proxy done" err="context canceled" url="wss://127.0.0.1:6443/v1-k3s/connect"

time="2022-04-27T00:06:50Z" level=info msg="error in remotedialer server [400]: websocket: close 1006 (abnormal closure): unexpected EOF"

time="2022-04-27T00:06:50Z" level=info msg="Handling backend connection request [lima-rancher-desktop]"

I0427 00:06:51.293408 3410 node.go:172] Successfully retrieved node IP: 192.168.50.195

I0427 00:06:51.293438 3410 server_others.go:140] Detected node IP 192.168.50.195

I0427 00:06:51.294507 3410 server_others.go:206] kube-proxy running in dual-stack mode, IPv4-primary

I0427 00:06:51.294529 3410 server_others.go:212] Using iptables Proxier.

I0427 00:06:51.294535 3410 server_others.go:219] creating dualStackProxier for iptables.

W0427 00:06:51.294544 3410 server_others.go:495] detect-local-mode set to ClusterCIDR, but no IPv6 cluster CIDR defined, , defaulting to no-op detect-local for IPv6

I0427 00:06:51.294811 3410 server.go:649] Version: v1.22.7+k3s1

I0427 00:06:51.296023 3410 config.go:315] Starting service config controller

I0427 00:06:51.296028 3410 shared_informer.go:240] Waiting for caches to sync for service config

I0427 00:06:51.296036 3410 config.go:224] Starting endpoint slice config controller

I0427 00:06:51.296038 3410 shared_informer.go:240] Waiting for caches to sync for endpoint slice config

I0427 00:06:51.301150 3410 controller.go:611] quota admission added evaluator for: events.events.k8s.io

I0427 00:06:51.396377 3410 shared_informer.go:247] Caches are synced for service config

I0427 00:06:51.396404 3410 shared_informer.go:247] Caches are synced for endpoint slice config

I0427 00:06:52.318921 3410 range_allocator.go:82] Sending events to api server.

I0427 00:06:52.319037 3410 range_allocator.go:110] No Service CIDR provided. Skipping filtering out service addresses.

I0427 00:06:52.319041 3410 range_allocator.go:116] No Secondary Service CIDR provided. Skipping filtering out secondary service addresses.

I0427 00:06:52.319071 3410 controllermanager.go:577] Started "nodeipam"

W0427 00:06:52.319075 3410 controllermanager.go:556] "route" is disabled

I0427 00:06:52.319136 3410 node_ipam_controller.go:154] Starting ipam controller

I0427 00:06:52.319140 3410 shared_informer.go:240] Waiting for caches to sync for node

I0427 00:06:52.320612 3410 node_lifecycle_controller.go:377] Sending events to api server.

I0427 00:06:52.320711 3410 taint_manager.go:163] "Sending events to api server"

I0427 00:06:52.320742 3410 node_lifecycle_controller.go:505] Controller will reconcile labels.

I0427 00:06:52.320767 3410 controllermanager.go:577] Started "nodelifecycle"

I0427 00:06:52.320873 3410 node_lifecycle_controller.go:539] Starting node controller

I0427 00:06:52.320876 3410 shared_informer.go:240] Waiting for caches to sync for taint

I0427 00:06:52.324160 3410 controllermanager.go:577] Started "persistentvolume-expander"

I0427 00:06:52.324244 3410 expand_controller.go:327] Starting expand controller

I0427 00:06:52.324250 3410 shared_informer.go:240] Waiting for caches to sync for expand

I0427 00:06:52.327306 3410 controllermanager.go:577] Started "clusterrole-aggregation"

I0427 00:06:52.327354 3410 clusterroleaggregation_controller.go:194] Starting ClusterRoleAggregator

I0427 00:06:52.327357 3410 shared_informer.go:240] Waiting for caches to sync for ClusterRoleAggregator

I0427 00:06:52.333364 3410 controllermanager.go:577] Started "ephemeral-volume"

I0427 00:06:52.333462 3410 controller.go:170] Starting ephemeral volume controller

I0427 00:06:52.333466 3410 shared_informer.go:240] Waiting for caches to sync for ephemeral

I0427 00:06:52.335636 3410 controllermanager.go:577] Started "csrsigning"

I0427 00:06:52.335700 3410 certificate_controller.go:118] Starting certificate controller "csrsigning-legacy-unknown"

I0427 00:06:52.335704 3410 shared_informer.go:240] Waiting for caches to sync for certificate-csrsigning-legacy-unknown

I0427 00:06:52.335724 3410 certificate_controller.go:118] Starting certificate controller "csrsigning-kubelet-serving"

I0427 00:06:52.335726 3410 shared_informer.go:240] Waiting for caches to sync for certificate-csrsigning-kubelet-serving

I0427 00:06:52.335748 3410 certificate_controller.go:118] Starting certificate controller "csrsigning-kubelet-client"

I0427 00:06:52.335752 3410 shared_informer.go:240] Waiting for caches to sync for certificate-csrsigning-kubelet-client

I0427 00:06:52.335763 3410 certificate_controller.go:118] Starting certificate controller "csrsigning-kube-apiserver-client"

I0427 00:06:52.335764 3410 shared_informer.go:240] Waiting for caches to sync for certificate-csrsigning-kube-apiserver-client

I0427 00:06:52.335787 3410 dynamic_serving_content.go:129] "Starting controller" name="csr-controller::/var/lib/rancher/k3s/server/tls/client-ca.crt::/var/lib/rancher/k3s/server/tls/client-ca.key"

I0427 00:06:52.335806 3410 dynamic_serving_content.go:129] "Starting controller" name="csr-controller::/var/lib/rancher/k3s/server/tls/server-ca.crt::/var/lib/rancher/k3s/server/tls/server-ca.key"

I0427 00:06:52.335818 3410 dynamic_serving_content.go:129] "Starting controller" name="csr-controller::/var/lib/rancher/k3s/server/tls/client-ca.crt::/var/lib/rancher/k3s/server/tls/client-ca.key"

I0427 00:06:52.335837 3410 dynamic_serving_content.go:129] "Starting controller" name="csr-controller::/var/lib/rancher/k3s/server/tls/client-ca.crt::/var/lib/rancher/k3s/server/tls/client-ca.key"

I0427 00:06:52.339019 3410 controllermanager.go:577] Started "ttl"

I0427 00:06:52.339067 3410 ttl_controller.go:121] Starting TTL controller

I0427 00:06:52.339070 3410 shared_informer.go:240] Waiting for caches to sync for TTL

I0427 00:06:52.342071 3410 controllermanager.go:577] Started "pvc-protection"

I0427 00:06:52.342170 3410 pvc_protection_controller.go:110] "Starting PVC protection controller"

I0427 00:06:52.342175 3410 shared_informer.go:240] Waiting for caches to sync for PVC protection

I0427 00:06:52.345377 3410 controllermanager.go:577] Started "ttl-after-finished"

I0427 00:06:52.345431 3410 ttlafterfinished_controller.go:109] Starting TTL after finished controller

I0427 00:06:52.345436 3410 shared_informer.go:240] Waiting for caches to sync for TTL after finished

I0427 00:06:52.349777 3410 controllermanager.go:577] Started "endpoint"

I0427 00:06:52.349837 3410 endpoints_controller.go:195] Starting endpoint controller

I0427 00:06:52.349842 3410 shared_informer.go:240] Waiting for caches to sync for endpoint

I0427 00:06:52.352990 3410 controllermanager.go:577] Started "podgc"

I0427 00:06:52.353043 3410 gc_controller.go:89] Starting GC controller

I0427 00:06:52.353047 3410 shared_informer.go:240] Waiting for caches to sync for GC

I0427 00:06:52.360323 3410 controllermanager.go:577] Started "job"

I0427 00:06:52.360346 3410 job_controller.go:172] Starting job controller

I0427 00:06:52.360350 3410 shared_informer.go:240] Waiting for caches to sync for job

I0427 00:06:52.560089 3410 controllermanager.go:577] Started "disruption"

I0427 00:06:52.560127 3410 disruption.go:363] Starting disruption controller

I0427 00:06:52.560131 3410 shared_informer.go:240] Waiting for caches to sync for disruption

I0427 00:06:52.710759 3410 controllermanager.go:577] Started "persistentvolume-binder"

I0427 00:06:52.710789 3410 pv_controller_base.go:308] Starting persistent volume controller

I0427 00:06:52.710793 3410 shared_informer.go:240] Waiting for caches to sync for persistent volume

I0427 00:06:52.860809 3410 controllermanager.go:577] Started "pv-protection"

I0427 00:06:52.860883 3410 pv_protection_controller.go:83] Starting PV protection controller

I0427 00:06:52.860889 3410 shared_informer.go:240] Waiting for caches to sync for PV protection

I0427 00:06:53.010975 3410 controllermanager.go:577] Started "root-ca-cert-publisher"

I0427 00:06:53.011002 3410 publisher.go:107] Starting root CA certificate configmap publisher

I0427 00:06:53.011005 3410 shared_informer.go:240] Waiting for caches to sync for crt configmap

I0427 00:06:53.160800 3410 controllermanager.go:577] Started "endpointslice"

I0427 00:06:53.160879 3410 endpointslice_controller.go:257] Starting endpoint slice controller

I0427 00:06:53.160883 3410 shared_informer.go:240] Waiting for caches to sync for endpoint_slice

I0427 00:06:53.310830 3410 controllermanager.go:577] Started "daemonset"

I0427 00:06:53.310862 3410 daemon_controller.go:284] Starting daemon sets controller

I0427 00:06:53.310866 3410 shared_informer.go:240] Waiting for caches to sync for daemon sets

I0427 00:06:53.359915 3410 controllermanager.go:577] Started "csrapproving"

W0427 00:06:53.359924 3410 controllermanager.go:556] "service" is disabled

I0427 00:06:53.359943 3410 certificate_controller.go:118] Starting certificate controller "csrapproving"

I0427 00:06:53.359947 3410 shared_informer.go:240] Waiting for caches to sync for certificate-csrapproving

E0427 00:06:53.616926 3410 namespaced_resources_deleter.go:161] unable to get all supported resources from server: unable to retrieve the complete list of server APIs: metrics.k8s.io/v1beta1: the server is currently unable to handle the request

I0427 00:06:53.616971 3410 controllermanager.go:577] Started "namespace"

I0427 00:06:53.617013 3410 namespace_controller.go:200] Starting namespace controller

I0427 00:06:53.617017 3410 shared_informer.go:240] Waiting for caches to sync for namespace

I0427 00:06:53.761482 3410 controllermanager.go:577] Started "replicaset"

I0427 00:06:53.761511 3410 replica_set.go:186] Starting replicaset controller

I0427 00:06:53.761515 3410 shared_informer.go:240] Waiting for caches to sync for ReplicaSet

I0427 00:06:53.910723 3410 controllermanager.go:577] Started "statefulset"

I0427 00:06:53.910838 3410 stateful_set.go:148] Starting stateful set controller

I0427 00:06:53.910843 3410 shared_informer.go:240] Waiting for caches to sync for stateful set

I0427 00:06:53.914746 3410 shared_informer.go:240] Waiting for caches to sync for resource quota

I0427 00:06:53.926914 3410 job_controller.go:406] enqueueing job kube-system/helm-install-traefik-crd

I0427 00:06:53.927509 3410 shared_informer.go:247] Caches are synced for ClusterRoleAggregator

I0427 00:06:53.927853 3410 job_controller.go:406] enqueueing job kube-system/helm-install-traefik

W0427 00:06:53.927886 3410 actual_state_of_world.go:534] Failed to update statusUpdateNeeded field in actual state of world: Failed to set statusUpdateNeeded to needed true, because nodeName="lima-rancher-desktop" does not exist

I0427 00:06:53.931655 3410 shared_informer.go:247] Caches are synced for endpoint_slice_mirroring

I0427 00:06:53.935227 3410 shared_informer.go:247] Caches are synced for ReplicationController

E0427 00:06:53.935521 3410 memcache.go:196] couldn't get resource list for metrics.k8s.io/v1beta1: the server is currently unable to handle the request

I0427 00:06:53.935717 3410 shared_informer.go:247] Caches are synced for certificate-csrsigning-legacy-unknown

I0427 00:06:53.935739 3410 shared_informer.go:247] Caches are synced for certificate-csrsigning-kubelet-serving

I0427 00:06:53.935758 3410 shared_informer.go:247] Caches are synced for certificate-csrsigning-kubelet-client

I0427 00:06:53.935771 3410 shared_informer.go:247] Caches are synced for certificate-csrsigning-kube-apiserver-client

E0427 00:06:53.936321 3410 memcache.go:101] couldn't get resource list for metrics.k8s.io/v1beta1: the server is currently unable to handle the request

I0427 00:06:53.938806 3410 shared_informer.go:240] Waiting for caches to sync for garbage collector

I0427 00:06:53.939116 3410 shared_informer.go:247] Caches are synced for TTL

I0427 00:06:53.946364 3410 shared_informer.go:247] Caches are synced for TTL after finished

I0427 00:06:53.950711 3410 shared_informer.go:247] Caches are synced for endpoint

I0427 00:06:53.954008 3410 shared_informer.go:247] Caches are synced for GC

I0427 00:06:53.959996 3410 shared_informer.go:247] Caches are synced for certificate-csrapproving

I0427 00:06:53.961170 3410 shared_informer.go:247] Caches are synced for endpoint_slice

I0427 00:06:53.961182 3410 shared_informer.go:247] Caches are synced for disruption

I0427 00:06:53.961185 3410 disruption.go:371] Sending events to api server.